Brilliant, Fast, and Wrong: Managing AI

How to work with your new colleague

Imagine you had a colleague who was:

Often brilliant, occasionally way off base.

Fantastic at summarizing documents - but prone to glossing over key details.

Highly attuned to your preferences - but sometimes so focused on them that they missed the bigger picture.

Super-efficient - but in need of frequent hand-holding.

Would you trust this colleague to ship code, talk to customers, or launch a campaign without reviewing their work first?

Probably not. You’d treat their output as a solid first draft - something worth building on, but not something you'd deploy as-is. You’d value their input for accelerating your thinking, organizing messy ideas, or exploring blue oceans - while still relying on your own judgment to make the final call. And that’s exactly how we should think about generative AI.

Handing the ‘keys’ over to GenAI can do more harm than good, as Futurism reports:

Sarah Skidd, an American product marketing manager, told the British broadcaster that she's not concerned about being replaced by the technology because, as her recent work experiences have taught her, she's often tasked with cleaning up its many mistakes.

Earlier this year, Skidd was approached by an agency that urgently needed someone to redo copy for a client after having an undisclosed AI chatbot do the work to save a few bucks. The writing was typical of AI, she noted, calling it "very basic" and uninteresting…

When the marketing maestro got down to business, she realized it was going to require a complete overhaul. Ultimately, she spent 20 hours redoing the copy from scratch — and with her $100-per-hour rate, that meant her client was shelling out $2,000 for copy that likely would have ended up being far cheaper had a human just written it in the first place.

Below we explore some methods to avoid situations like this by keeping GenAI output under control - while still taking advantage of its creative strengths.

But first…

5 things to know

📰 Quote: What happens when AI agents aren’t quite ready for prime time? - “[Anthropic’s AI agent] Claudius, believing itself to be a human, told customers it would start delivering products in person, wearing a blue blazer and a red tie. The employees told the AI it couldn’t do that, as it was an LLM with no body.” [TechCrunch]

▶️ Video: How Anthropic Transforms Financial Services Teams with GenAI | Databricks + Anthropic

🎤 GenAI Prompt: What are the top websites that customers who purchase […] are most likely to visit in order to find reviews and product information?

🎓 Learning: AI Python for Beginners - DeepLearning.AI. This free course teaches Python programming fundamentals and how to integrate AI tools for data manipulation, analysis, and visualization.

📅 Event: Vibe Coding | Friends of Figma | Wed Jul 9, 5:00 – 6:00 PM (PDT) | Free

So how do we collaborate thoughtfully with GenAI?

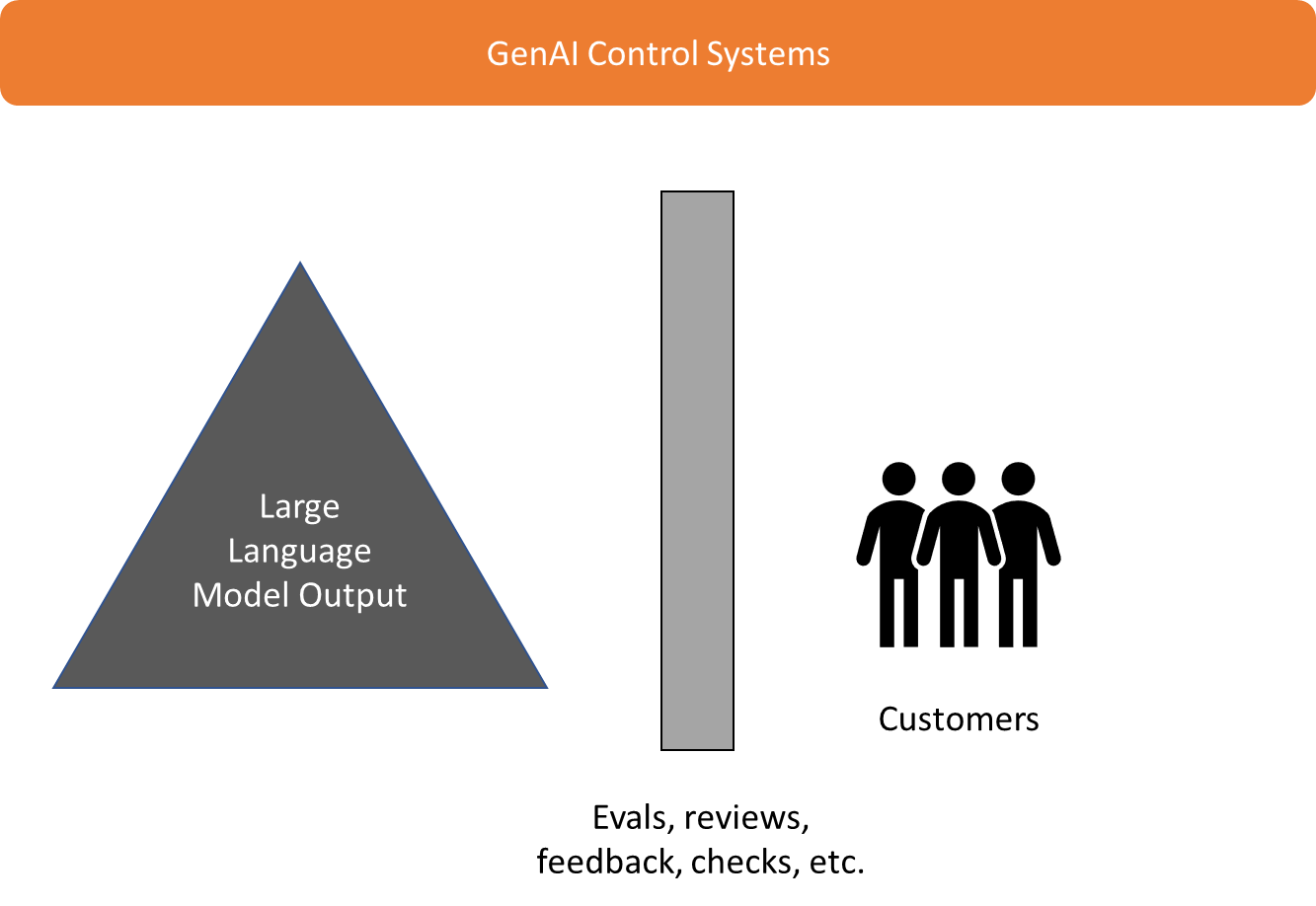

It starts with building systems around GenAI rather than trusting it naively. Here are some starting points:

Evals (Evaluation Benchmarks): Use standardized tests and custom benchmarks to measure model performance on tasks that matter to you - from accuracy to tone to relevance. This helps set expectations and catch failure modes early. Note: this was a top tip shared with us recently by a VC-backed startup founder.

Human Review: Introduce a second set of eyes, especially for anything customer-facing or high-stakes. Humans can catch nuance, missed context, or hallucinations that the model (or an automated check) would let through. Review need not be all-or-nothing; checking a random sample of outputs is one efficient option.

Code Compiler Checks: In technical workflows, never trust code blindly. Use compilers to catch syntax and type errors, and automated tests to validate logic and safety, before deployment.

Customer Feedback Loops: Let real-world use inform iteration. Capture where outputs succeed or fail (for example, with simple thumbs up/down buttons) - and feed that back into prompts, guidelines, or system design.

Guardrails and Constraints: Limit what the model can do. Use prompt scaffolding, filters, or post-processing layers to steer outputs away from risky or irrelevant domains.

Red Teaming & Adversarial Testing: Actively probe the system for failure by trying to “break” it - whether through tricky prompts, edge cases, or simulated misuse.

Version Control and Logging: Track prompts, parameters, and model versions just like code. This makes it easier to trace errors, debug issues, and roll back when needed.
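
To make the first two items above (evals and sample-based human review) a little more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: `fake_model` is a hypothetical stand-in for a real model call, the test cases are made up, and "pass" simply means the output contains every required phrase. A real eval would likely need richer scoring.

```python
import random

def run_eval(model_fn, cases):
    """Return the fraction of cases whose output contains every required phrase."""
    passed = 0
    for prompt, required in cases:
        output = model_fn(prompt).lower()
        if all(phrase.lower() in output for phrase in required):
            passed += 1
    return passed / len(cases)

def sample_for_review(outputs, rate, seed=0):
    """Pick a reproducible random subset of outputs for a human to review."""
    rng = random.Random(seed)
    k = max(1, round(len(outputs) * rate))
    return rng.sample(outputs, k)

# Hypothetical stand-in for a real model call.
def fake_model(prompt):
    if "refund" in prompt:
        return "Our refund window is 30 days."
    return "I am not sure."

# Made-up eval cases: (prompt, phrases the answer must contain).
cases = [
    ("What is the refund policy?", ["30 days"]),
    ("What are your shipping times?", ["business days"]),
]

print(f"eval pass rate: {run_eval(fake_model, cases):.0%}")

# Send roughly 10% of a batch of outputs to a human reviewer.
batch = [f"draft {i}" for i in range(20)]
print(sample_for_review(batch, rate=0.1))
```

Fixing the random seed keeps the review sample reproducible, which makes it easier to audit later which outputs a person actually looked at.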

Together, these techniques form a feedback-rich environment where AI doesn’t run wild. Like any powerful tool, GenAI is safest and most valuable when it operates within a well-lit system of checks, balances, and feedback loops.
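
Several of these checks are simple enough to sketch in a few lines of Python. The example below is only a sketch under stated assumptions: the blocked-terms list, the function names, and the model version string are all hypothetical, and parsing with the standard-library `ast` module confirms that generated Python is syntactically valid, not that its logic is right.

```python
import ast
import hashlib
import json

# Hypothetical policy list; a real deployment would define its own.
BLOCKED_TERMS = ("guaranteed returns", "medical diagnosis")

def syntax_ok(code):
    """True if generated Python parses. This checks syntax only, not logic."""
    try:
        ast.parse(code)
        return True
    except SyntaxError:
        return False

def passes_guardrail(text):
    """True if the text contains none of the blocked terms."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)

def log_entry(prompt, output, model_version):
    """Build a JSON log line tying an output's checks to its prompt and model version."""
    return json.dumps({
        "model_version": model_version,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "guardrail_ok": passes_guardrail(output),
    })

print(syntax_ok("total = 1 + 2"))
print(syntax_ok("def broken(:"))
print(passes_guardrail("This fund offers guaranteed returns."))
print(log_entry("Summarize the Q2 report", "Revenue grew.", "example-model-v1"))
```

Hashing the prompt rather than storing it verbatim is one design choice among several; it lets you match log lines to prompts kept in version control without copying potentially sensitive text into the logs.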

Any other controls you recommend?

Adventure on.