Turn up the temperature

Entropy, chocolate chip cookies, and more...

Howdy fellow adventurers,

I suppose that I’ve become close enough with ChatGPT that it felt comfortable telling me that my chocolate chip cookie recipe is boring (aka very statistically predictable). So can GenAI come up with more creative ideas, whether for baking or text generation? We decided to put the LLMs to the test, varying the temperature setting of our prompts.

To continue the analogy, imagine you needed to choose ingredients to complete your cookie recipe. How would you decide which ingredients to pick to elevate your recipe to Showstopper status? The approach you take is directly related to the GenAI concept of temperature. For example:

Low Temperature Ideas: You play it safe, selecting ingredients that are commonly found in existing recipe books.

High Temperature Ideas: This is where things get interesting. Imagine you had access to a whole cupboard of exotic ingredients (and maybe even some made-up ones too). You explore a wider range of options, picking a less common ingredient.

We’ve been experimenting with writing prompts with varying degrees of temperature - and it’s really impacted how we think about prompting for creativity. Thankfully, ChatGPT (as well as several other LLMs) have some suggestions for turning ideas (including boring chocolate chip cookies) up to 11.

If you enjoy this issue, please pass it along to a friend or colleague!

In this issue:

Temperature - dialing up the creativity

Spotlight: The 3A’s framework for GenAI ‘quick wins’

What we’re reading this week

Temperature - dialing up the creativity

Within large language models (LLMs) such as ChatGPT, temperature isn't about preheating ovens – it's about controlling the creativity and unpredictability of AI-generated text. The term originates from thermodynamics, where higher temperature leads to increased entropy, or randomness, within systems.

When an LLM writes, it ‘guesses’ the most likely next token (think of a token as a small unit of text, such as a word or phrase). Temperature controls how strictly the AI follows its top guess. Low temperature means always picking the most probable word, while high temperature allows for more surprising and creative word choices. Google Cloud explains the impact on response quality here:

Temperature controls the degree of randomness in token selection. Lower temperatures are good for prompts that require a less open-ended or creative response, while higher temperatures can lead to more diverse or creative results. A temperature of

0means that the highest probability tokens are always selected. In this case, responses for a given prompt are mostly deterministic, but a small amount of variation is still possible.If the model returns a response that's too generic, too short, or the model gives a fallback response, try increasing the temperature.

The temperature range for many LLMs is 0-1, although some models span from 0-2. A key distinction to make when choosing a temperature level for a specific prompt is whether you care more about accuracy or creativity:

If accuracy matters more - use the default or even lower temperature values (<0.5). Examples include technical writing, code generation, question answering, customer-facing interactions.

If creativity matters more - experiment with using higher values (>0.5). Examples include creative writing, marketing content generation, brainstorming.

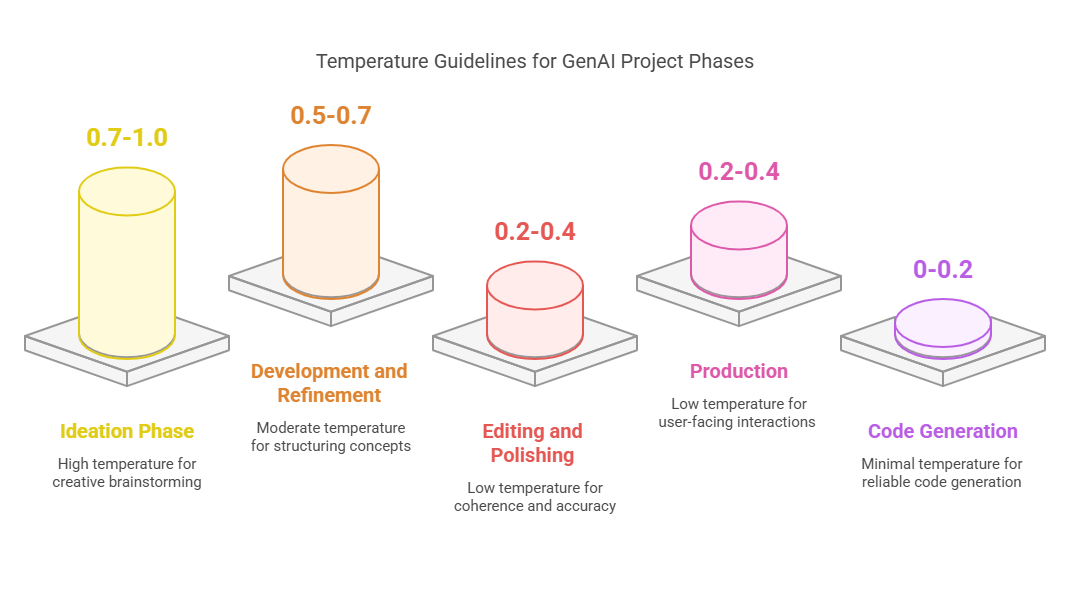

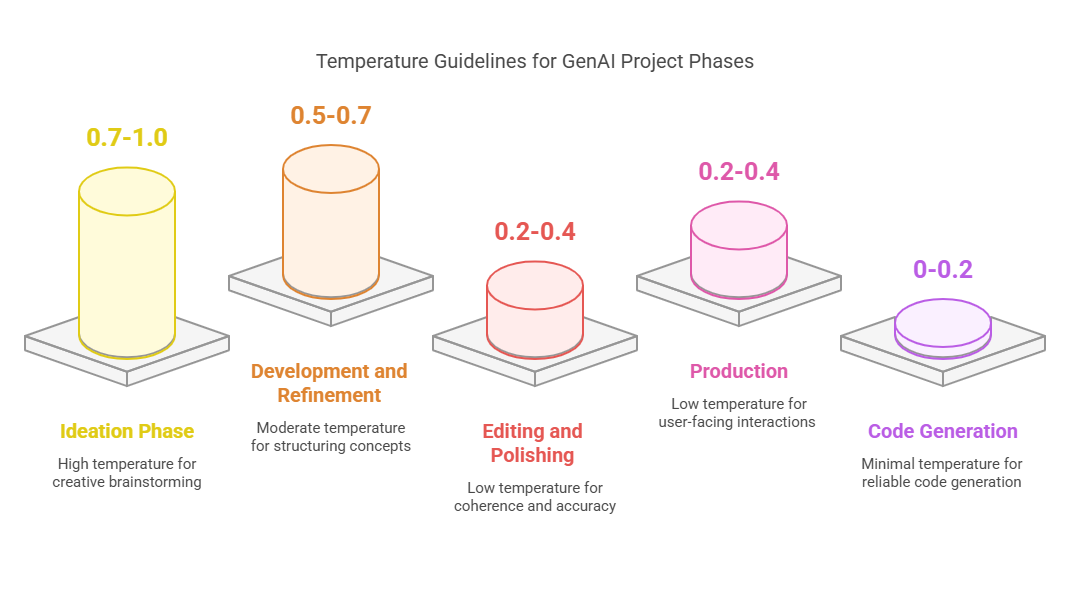

You can also vary the temperature levels over the course of a project. Start with high values to brainstorm ideas, then switch to lower levels as you develop, refine, and edit your work. See the below framework we developed leveraging Perplexity and the OpenAI Developer Community for guidance on when to use different temperature values across your project timeline:

So how do you actually modify temperature?

If you’re a developer, it’s easy. You can control temperature directly if you’re interacting with an LLM inside a developer studio environment or plugging into a GenAI API.

Unfortunately, chat-based LLM interfaces such as ChatGPT.com don’t usually include the ability to directly modify temperature. So, one simple approach is selecting models that naturally have default temperature values aligned with your specific use case. We asked each LLM for their default values; unfortunately, there isn’t much variation across temperature so there are no clear LLMs to pick for very creative or very accurate tasks. Interestingly, the models currently seem to mostly favor temperature settings that yield more creative output, despite the higher risk of model hallucination. Examples below:

ChatGPT: “I’d estimate my default temperature today is around 0.7 [on a 0-1 scale], which is my usual setting. This means my responses balance creativity and coherence.”

Claude: “My responses naturally aim to be balanced - neither overly rigid nor overly creative - while remaining helpful and appropriate to the context.”

Gemini 1.5 Flash: “I'd grade my current temperature setting around 0.7 [on a 0-1 scale]. This allows me to generate creative and informative responses while maintaining a good level of coherence and relevance.”

Perplexity: “Based on my current configuration, I estimate my temperature to be around 0.5-0.6 on a 0-1 scale.”

Thankfully, there is a simple alternate solution - just add the specified temperature to your prompt, as today’s LLMs are smart enough to simulate the effects of whatever temperature you throw at it. And due to the simulation effect, we can even input temperature values beyond the formal upper ranges, and the LLMs will interpret those values as ‘extreme creativity’ scores.

ChatGPT.com’s advice for modifying temperature makes this very straightforward:

You can guide me by explicitly stating how much creativity or variability you want in my responses. Here’s how:

Specify a Temperature Range:

“Give me a response with a low temperature (0.25), focusing on well-known facts.”

“Use a high temperature (0.95) and make the response as creative and speculative as possible.”

So, which values should we use? Feel free to specify any temperature value you’d like, but here are some suggested levels with descriptions provided by ChatGPT:

Low temperature (e.g., 0.25) → More predictable, fact-based, and structured responses. The AI sticks to widely accepted answers and minimizes speculation.

Medium temperature (e.g., 0.5) → A balanced mix of creative and reliable responses. The AI introduces some variation, but is still reasonably grounded.

High temperature (e.g., 0.75) → More diverse, unexpected, and exploratory responses. The AI takes more risks and suggests less conventional ideas.

Very high temperature (e.g., 1.0 or beyond) → Highly creative, speculative, and freeform responses. The AI might generate imaginative or even surreal ideas.

Let’s see how much more creative we can be when we ask a single question but vary the temperature. Below is our prompt, plus condensed responses from several LLMs:

Prompt: Complete this sentence at different temperature levels: 0.25, 0.5, 0.75, 1.0, 2,0. Sentence: "The most profitable investment opportunity within GenAI right now is..."

ChatGPT:

Enterprise AI solutions for automation, customer support, and data analysis [0.25]

AI-as-a-Service (AIaaS) for applications in healthcare, finance, & marketing [0.50]

Synthetic data generation [0.75]

Hyper-personalized AI companions/assistants [1.0]

Sentient AI that generates fully autonomous companies from scratch [2.0]

And here’s Claude’s responses:

Enterprise API integration solutions (sectors like finance and healthcare) [0.25]

Specialized AI training and prompt engineering tools to help businesses [0.50]

Platforms that enable collaboration between domain experts and AI systems [0.75]

Autonomous AI agents that can solve complex business problems [1.0]

Bio-digital hybrid systems that merge organic neural networks with AI [2.0]

Note that as the temperature increases, the tone of the text moves from:

Factual → Imaginative

Proven → Experimental

Practical → Theoretical

Safe → Risky

Predictable → Less Expected

Finally, to explore a more tangible example, we next asked ChatGPT and Claude to improve our chocolate chip cookies:

Prompt: “Answer this question at different temperature levels: 0.25, 0.5, 0.75, 1.0, 2.0. What one additional ingredient would greatly improve a standard recipe for chocolate chip cookies?”

ChatGPT shared increasingly fascinating - and ultimately made up - suggestions:

Browned butter (adds a rich, nutty flavor) [0.25]

Espresso powder (deepens the chocolate taste) [0.50]

Miso paste (adds an umami depth) [0.75]

Crushed potato chips (adds an addictive salty crunch) [1.0]

Carbonated honey pearls that fizz and pop in your mouth [2.0]

Claude offered very similar responses - along with perhaps our favorite suggestions (rose petals and ionized mountain air):

Espresso powder (enhances the chocolate flavor) [0.25]

Browned butter (creates a nutty, caramel undertone) [0.50]

Miso paste (amplifies the sweetness while adding a subtle savory complexity) [0.75]

Crushed dried rose petals (creates an unexpected aromatic dimension) [1.0]

Powdered cricket flour and essence of ionized mountain air [2.0]

Model comparison note: FWIW, we noticed that Perplexity’s standard model responses tended to be more predictable/practical than either ChatGPT or Claude’s at identical temperature levels. As an example, Perplexity recommended candied violets or rose petals as its 2.0 idea vs. 1.0 for Claude): “Temperature 2.0: The most outlandish ingredient to transform chocolate chip cookies could be edible flowers, like candied violets or rose petals.”

Although our tests were not exhaustive, they support Perplexity’s claim of a lower default temperature score. This suggests that Perplexity might be a good LLM for lower-temperature work requiring more focus on realism and accuracy.

And for the record - we’re planning to make a couple of these variations and will report back next week. Know where we can find any ionized mountain air essence?

Spotlight

The 3A framework for GenAI 'quick wins' [Yemi Olagbaiye | Tech Informed]

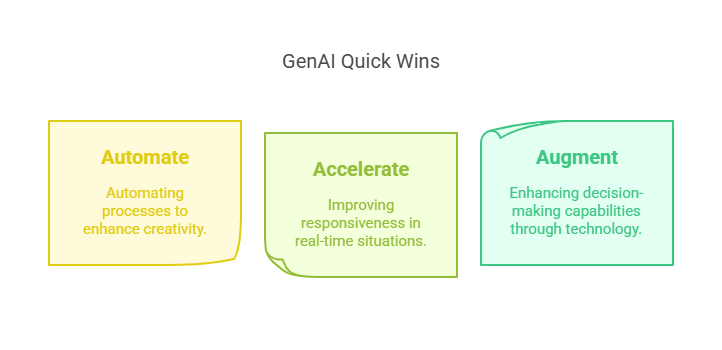

Launching AI solutions can be complex and overwhelming, but it doesn’t need to be. The 3A’s framework – Automate, Accelerate, and Augment – is designed to help SMBs achieve low-cost, low-risk AI results within a timeline of 1-2 years:

Key steps:

Automate to free up human creativity: Identify high-volume, low-complexity tasks that can be automated with GenAI. Think scheduling, doc review, FAQs.

Accelerate real-time responsiveness: Leverage GenAI to reduce the time and effort needed to deliver services, especially where speed-to-market is critical. Think customized shopping lists and product recommendations.

Augment for ultimate decision power: Use GenAI-generated insights and outlooks to enhance human decision-making in areas such as planning and forecasting. Think expert advice, prediction, error-checking.

What we’re reading this week

“LLMs are fundamentally matching the patterns they’ve seen, and their abilities are constrained by mathematical boundaries. Embedding tricks and chain-of-thought prompting simply extends their ability to do more sophisticated pattern matching. The mathematical results imply that you can always find compositional tasks whose complexity lies beyond a given system’s abilities.”

LLMs Face Their Limitations [Quanta Magazine]

“To understand the latest advancements in generative AI, imagine a courtroom.

Judges hear and decide cases based on their general understanding of the law. Sometimes a case — like a malpractice suit or a labor dispute — requires special expertise, so judges send court clerks to a law library, looking for precedents and specific cases they can cite.

Like a good judge, large language models (LLMs) can respond to a wide variety of human queries. But to deliver authoritative answers — grounded in specific court proceedings or similar ones — the model needs to be provided that information.

The court clerk of AI is a process called retrieval-augmented generation, or RAG for short.”

Overview of Retrieval-Augmented Generation (RAG) [NVIDIA]

Adventure on.